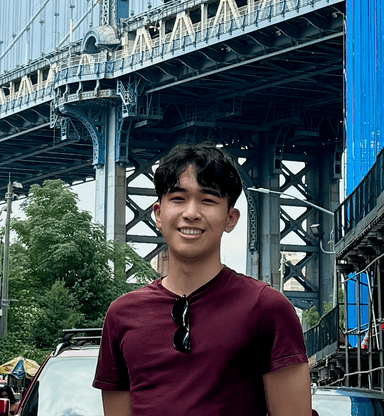

👋 Hi! I'm David. I'm currently a senior at UC Santa Barbara studying Computer Science and one of the founding ML Engineers at Alpha Design, where we're building AI agents for chip design.

I'm also an undergraduate researcher in the UCSB NLP Lab, where I'm currently working on generating synthetic datasets for training and evaluating AI agents. My research interests lie primarily in Machine Learning, Natural Language Processing, and Distributed Systems.

Previously, I've worked as a Software Engineer Intern at GlossGenius, Snowflake, C3.ai, and Viasat.

In my free time, I like to go rock climbing, bake bread, play board games, eat good food, and go on runs!

Experience

Founding ML Engineer

Alpha Design | September 2024 - Present

Building RAG systems for long document and codebase understanding.

SWE Intern - Growth/AI

GlossGenius | June 2024 - September 2024

Built out a referral tracking system, an AI onboarding experience, and a new Platinum tier plan to support large teams.

SWE Intern - Snowpark Container Services

Snowflake | January 2024 - March 2024

Developed a CLI, REST API, and Python SDK for developers to interact with Snowpark Container Services, a secure, managed platform for deploying containerized applications in Snowflake.

SWE Intern - Data Platform

C3.ai | June 2023 - September 2023

Implemented a performance monitoring API and dashboard for C3.ai's data platform to help engineers diagnose and fix issues.

SWE Intern - Automation

Viasat | June 2022 - September 2022

Designed and deployed a statistical model that helps the business team predict project timelines from JIRA metrics.

Research

Ongoing Research

Automatic Task Generation for LLM Agents

Developing a scalable method for generating a dataset of realistic web tasks for training and evaluating AI web agents.

Past Research

(EMNLP 2023) MAF: Multi-Aspect Feedback for Improving Reasoning in Large Language Models

Multi-Aspect Feedback is an iterative refinement framework designed to enhance language models' reasoning abilities by addressing a variety of error types. The method incorporates multiple feedback modules, including frozen language models and external tools, each targeting specific categories of errors such as hallucinations, incorrect reasoning steps, and mathematical inaccuracies. Experimental results show improvements of up to 20% in mathematical reasoning and 18% in logical entailment tasks.

Read the paper